Last updated: 14 October 2025

"Freedom of expression ends where harm begins—and AI must learn that boundary."

The rise of AI chat systems has transformed the way people interact with technology. From personal assistants and customer support bots to virtual tutors and companions, conversational AI now mediates billions of daily interactions. But as these systems grow in capability and reach, so too does the need to ensure safe, respectful, and compliant communication.

This is where content moderation comes in. It's the invisible but critical process that helps keep AI-driven conversations free from harmful, abusive, or misleading material.

In this article, we'll unpack how content moderation works in AI chat systems, exploring the techniques, challenges, and ethical principles that define this evolving field.

1. Why Content Moderation Matters in AI

Much traditional social media moderation is reactive: posts are published, flagged, and then reviewed, often by humans. AI chat moderation has to operate in real time. It screens both what users send and what the model generates, and it must respond to potentially harmful language fast enough not to stall the conversation, while balancing freedom of expression with user protection.

⚠️ The Stakes

Unmoderated AI chat systems can:

- Generate or amplify hate speech, harassment, or discrimination.

- Spread misinformation or unsafe advice (e.g., medical or financial).

- Expose users—especially minors—to inappropriate or offensive content.

- Cause reputational and legal risks for companies deploying them.

Content moderation helps keep conversations constructive, inclusive, and aligned with ethical standards and applicable laws.

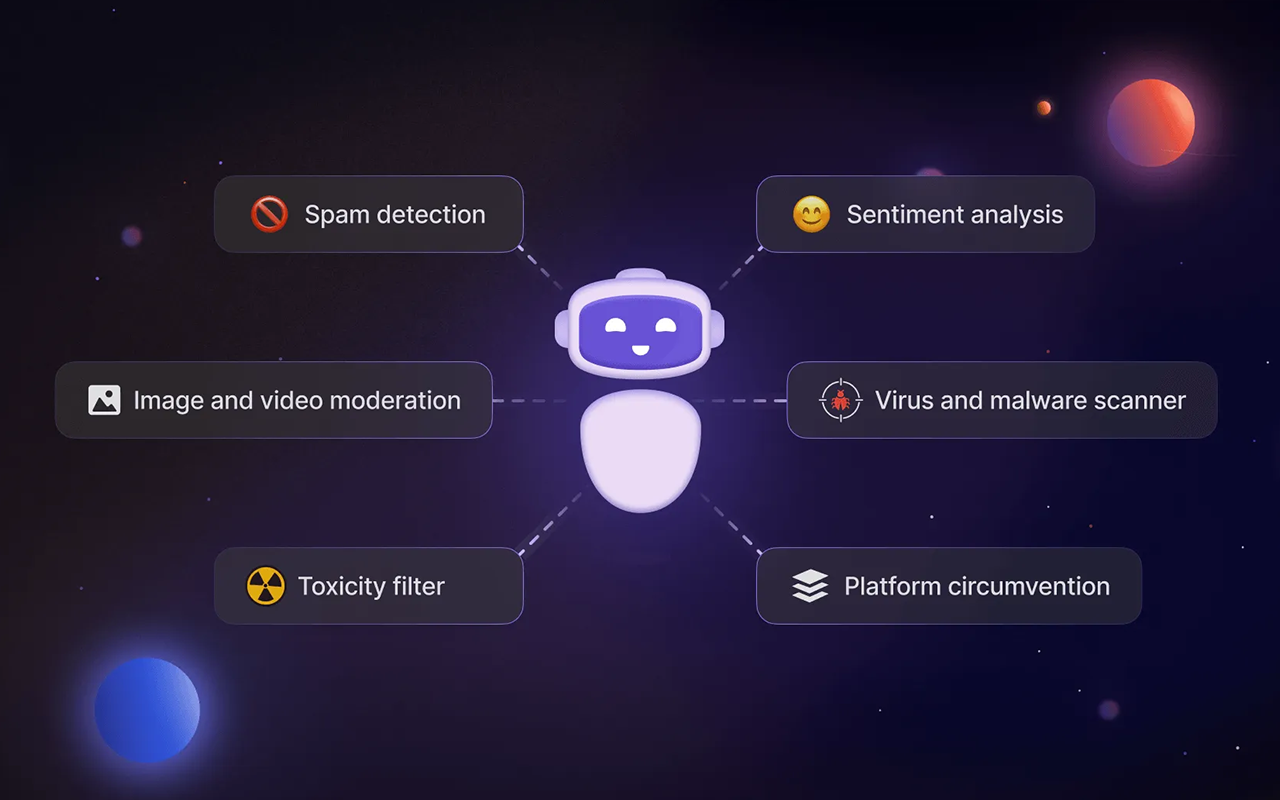

2. The Architecture of Moderation Systems

Modern moderation pipelines often combine multiple layers of filters, classifiers, and governance mechanisms.

🧠 A Typical Moderation Pipeline

- Input Analysis – The user's message is pre-processed and tokenized.

- Classification – The system runs the input through one or more trained classifiers (e.g., for toxicity, hate speech, self-harm).

- Action Routing – Based on risk level, the system chooses an action:

  - Allow and continue

  - Warn or flag the message

  - Block or filter the content

  - Escalate to human moderators for review

- Feedback Loop – User or moderator feedback is logged and used to retrain models.

This layered architecture helps ensure speed, scalability, and adaptability in real-world deployments.
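The four stages can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `classify` is a hypothetical stand-in for a trained model (it just looks words up in a toy score table), and the 0.3, 0.6, and 0.9 routing thresholds are arbitrary example values.

```python
import re
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    ESCALATE = "escalate"
    BLOCK = "block"


# Toy per-word risk scores standing in for a trained classifier (hypothetical).
TOY_RISK = {"idiot": 0.5, "moron": 0.65, "scum": 0.92}


def preprocess(message: str) -> list[str]:
    """Input analysis: lowercase the message and split it into word tokens."""
    return re.findall(r"[a-z']+", message.lower())


def classify(tokens: list[str]) -> float:
    """Classification: highest per-token risk in [0, 1] (a toy, not a real model)."""
    return max((TOY_RISK.get(t, 0.0) for t in tokens), default=0.0)


def route(score: float) -> Action:
    """Action routing with example thresholds."""
    if score >= 0.9:
        return Action.BLOCK
    if score >= 0.6:
        return Action.ESCALATE  # likely harmful but not certain: ask a human
    if score >= 0.3:
        return Action.WARN
    return Action.ALLOW


@dataclass
class FeedbackLog:
    """Feedback loop: keep every decision so reviewers can correct it for retraining."""
    records: list[tuple[str, float, Action]] = field(default_factory=list)


def moderate(message: str, log: FeedbackLog) -> Action:
    score = classify(preprocess(message))
    action = route(score)
    log.records.append((message, score, action))
    return action
```

In a real deployment `classify` would call one or more models, and the same checks would typically also run on the model's reply before it is shown to the user.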

3. The AI Behind Moderation: Key Techniques

🔍 Machine Learning Classifiers

At the core are supervised ML models trained to detect specific categories of harmful content. Common classifiers include:

- Toxicity and abuse detection

- Sexually explicit or violent content detection

- Hate speech and discrimination

- Misinformation or conspiracy patterns

- Self-harm and suicide risk indicators

These models often use transformer-based architectures (like BERT or DistilBERT) fine-tuned on large, labeled datasets.
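Moderation classifiers are commonly set up as multi-label models: each category gets its own independent sigmoid score, so a single message can score high for both toxicity and hate speech (a softmax would instead force the categories to compete). The sketch below shows only that final scoring step. The logits are made-up numbers standing in for a fine-tuned model's output, and the per-category thresholds are hypothetical.

```python
import math

CATEGORIES = ["toxicity", "sexual", "hate", "misinformation", "self_harm"]

# Hypothetical per-category decision thresholds (tuned separately in practice).
THRESHOLDS = {"toxicity": 0.8, "sexual": 0.7, "hate": 0.6,
              "misinformation": 0.9, "self_harm": 0.3}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def multilabel_scores(logits: list[float]) -> dict[str, float]:
    """Independent sigmoid per category: the scores need not sum to 1."""
    return {cat: sigmoid(z) for cat, z in zip(CATEGORIES, logits)}


def flagged(scores: dict[str, float]) -> list[str]:
    """Return every category whose score meets its own threshold."""
    return [cat for cat, s in scores.items() if s >= THRESHOLDS[cat]]


# Made-up logits standing in for the model's final-layer output.
scores = multilabel_scores([2.0, -3.0, 1.0, -4.0, -0.5])
print(flagged(scores))  # ['toxicity', 'hate', 'self_harm']
```

The low self-harm threshold in this example reflects a common design choice: for high-severity categories, a missed case usually costs more than a false alarm, so the threshold favors recall.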

🧩 Rule-Based Filters

Complementing ML models are rule-based systems that enforce explicit policy boundaries.

Examples:

- Keyword blacklists for banned terms.

- Regex patterns for personally identifiable information (PII).

- Heuristic scoring for spam or solicitation attempts.

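This hybrid approach (ML plus rules) balances adaptability with precise control: classifiers generalize to phrasings they have never seen, while rules enforce hard policy lines exactly and predictably.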
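The three rule types above can be sketched with Python's standard `re` module. The blocklist terms are placeholders, the PII patterns cover only a simple email shape and US-style phone numbers, and the spam weights are illustrative assumptions; real PII detection needs far broader coverage than this.

```python
import re

# Placeholder blocklist; word boundaries avoid matching inside longer words.
BLOCKLIST = {"badword", "worseword"}
BLOCK_RE = re.compile(
    r"\b(" + "|".join(map(re.escape, sorted(BLOCKLIST))) + r")\b", re.IGNORECASE
)

# Simplified PII patterns: email addresses and US-style phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def contains_blocked_term(text: str) -> bool:
    return BLOCK_RE.search(text) is not None


def redact_pii(text: str) -> str:
    """Replace each PII match with a [TYPE] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def spam_score(text: str) -> int:
    """Heuristic points for links, mostly-uppercase text, and punctuation runs."""
    score = 2 * len(re.findall(r"https?://", text))
    if len(text) > 10 and sum(c.isupper() for c in text) / len(text) > 0.6:
        score += 2
    if re.search(r"[!?$]{3,}", text):
        score += 1
    return score
```

For example, `redact_pii("Mail jo.doe@example.com or call 555-123-4567.")` returns `"Mail [EMAIL] or call [PHONE]."`, and `contains_blocked_term("badwords")` is `False` because of the word boundaries.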

4. Human-in-the-Loop Moderation

Even the best AI models can't fully interpret context, intent, or cultural nuance. That's why leading platforms integrate human moderators into the loop.

👥 The Human Role

- Review edge cases where AI confidence is low.

- Refine and relabel training data for better model performance.

- Set and enforce ethical guidelines that evolve with user needs.

- Ensure culturally sensitive handling of topics like gender, religion, or politics.

AI accelerates moderation, but humans provide judgment and empathy—qualities machines still can't replicate.
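The first two duties often meet in a review queue. The sketch below, built on Python's standard `heapq`, queues messages whose score falls in an uncertain band, serves the ones closest to the decision boundary first (so reviewer time goes where the model is least sure), and stores each human verdict as a label for retraining. `ReviewQueue`, the 0.5 boundary, and the 0.3 to 0.7 band are illustrative assumptions.

```python
import heapq
import itertools
from typing import Optional


class ReviewQueue:
    """Queue low-confidence decisions for human review, most uncertain first."""

    def __init__(self, low: float = 0.3, high: float = 0.7, boundary: float = 0.5):
        self.low, self.high, self.boundary = low, high, boundary
        self._heap = []                    # entries: (distance, order, message, score)
        self._order = itertools.count()    # tie-breaker keeps submission order
        self.labels = []                   # (message, is_harmful) pairs for retraining

    def submit(self, message: str, score: float) -> bool:
        """Queue the message if the model's score falls inside the review band."""
        if self.low <= score <= self.high:
            distance = abs(score - self.boundary)  # smaller distance = less confident
            heapq.heappush(self._heap, (distance, next(self._order), message, score))
            return True
        return False

    def next_case(self) -> Optional[str]:
        """Pop the most uncertain message, or None if the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None

    def record_verdict(self, message: str, is_harmful: bool) -> None:
        """Store the moderator's decision as a new training label."""
        self.labels.append((message, is_harmful))
```

Messages outside the band are handled automatically; only the gray zone consumes human time.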

5. Challenges in Moderating AI Conversations

1. Contextual Ambiguity

A single phrase can mean different things depending on context. For instance, "kill the lights" is harmless, but "kill yourself" isn't. A model that scores each message in isolation struggles to discern intent; it needs awareness of the broader conversation, such as the turns that came before.
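One common remedy is to give the classifier a window of recent turns rather than the latest message alone. A minimal sketch: `classify` is a hypothetical text-to-risk function you would supply, and the `[SEP]` separator and default window size are assumptions (the right input format depends on how the model was trained).

```python
from collections import deque
from typing import Callable


class ContextualModerator:
    """Score each new message together with up to `max_turns` previous messages."""

    def __init__(self, classify: Callable[[str], float], max_turns: int = 4):
        self.classify = classify  # hypothetical: maps text to a risk score in [0, 1]
        self.history = deque(maxlen=max_turns)  # oldest turns drop off automatically

    def score(self, message: str) -> float:
        # Join recent turns with a separator so the model sees the exchange, not one line.
        context = " [SEP] ".join([*self.history, message])
        self.history.append(message)
        return self.classify(context)
```

With `max_turns=2`, the fourth message in a conversation `a, b, c, d` is scored as `"b [SEP] c [SEP] d"`.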

2. Bias in Training Data

Moderation models trained on biased data may over-police certain dialects (flagging benign messages as toxic) or under-detect harmful speech in underrepresented languages. Responsible developers regularly measure false-positive and false-negative rates separately for each language and dialect group, not just overall, to catch these disparities.
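A minimal sketch of that per-group check. The group names and evaluation records below are invented for illustration; in practice they would come from a labeled evaluation set annotated with language or dialect.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, actually_harmful, predicted_harmful) booleans.

    Returns {group: (false_positive_rate, false_negative_rate)}.
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, actual, predicted in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            c["fn"] += int(not predicted)  # harmful message the model missed
        else:
            c["neg"] += 1
            c["fp"] += int(predicted)      # benign message the model flagged
    return {
        g: (c["fp"] / c["neg"] if c["neg"] else 0.0,
            c["fn"] / c["pos"] if c["pos"] else 0.0)
        for g, c in counts.items()
    }


# Invented evaluation records, for illustration only.
records = [
    ("dialect_a", False, True), ("dialect_a", False, False), ("dialect_a", True, True),
    ("dialect_b", False, False), ("dialect_b", False, False), ("dialect_b", True, False),
]
print(error_rates_by_group(records))  # {'dialect_a': (0.5, 0.0), 'dialect_b': (0.0, 1.0)}
```

In this toy data, both groups see the same overall accuracy (4 of 6 correct), yet one suffers only false positives and the other only false negatives, which is exactly what an aggregate metric would hide.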

3. Dynamic Language

Slang, memes, and coded language evolve rapidly—often faster than datasets can be updated. Keeping up requires continual model retraining and linguistic monitoring.

4. Scalability vs. Accuracy

Real-time moderation for millions of users demands computational efficiency. Smaller models, such as distilled or quantized classifiers, reduce latency and cost but can give up some accuracy, and the trade-off is sharpest on mobile and edge devices with limited compute.
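One widely used way to soften this trade-off is a cascade: a cheap model handles clear-cut messages, and only borderline ones are passed to a larger, slower model. A sketch under assumed names: `fast_model` and `slow_model` are hypothetical callables, and the 0.1 and 0.9 cutoffs are example values that would be tuned on validation data.

```python
from typing import Callable


def cascade(text: str,
            fast_model: Callable[[str], float],
            slow_model: Callable[[str], float],
            low: float = 0.1,
            high: float = 0.9) -> tuple[float, str]:
    """Two-stage moderation: call the expensive model only for uncertain cases."""
    fast_score = fast_model(text)
    if fast_score < low or fast_score > high:
        return fast_score, "fast"   # confident either way: skip the expensive model
    return slow_model(text), "slow"  # borderline: pay for the more accurate model
```

If most traffic is clearly benign, the slow model runs on only a small share of messages, so average latency stays close to that of the fast model.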

6. Ethical Foundations of Content Moderation

⚖️ Balancing Expression and Safety

Content moderation sits at the intersection of free speech and harm prevention. Over-moderation can stifle creativity or discussion; under-moderation can cause harm or reputational damage.

The goal is proportional response—protecting users while preserving genuine, respectful dialogue.

🧭 Guiding Principles

Ethical frameworks such as the OECD AI Principles and UNESCO's Recommendation on the Ethics of Artificial Intelligence highlight key values:

- Transparency – Users should know when content is filtered or flagged.

- Accountability – Developers must be responsible for model outcomes.

- Fairness – Systems must treat all users equitably, across languages and cultures.

- Human Oversight – Automated moderation should never be entirely unsupervised.

7. Tools and Frameworks for Content Moderation

🧠 Popular APIs and Models

- OpenAI Moderation API – Pretrained classifiers for harmful and sensitive content.

- Perspective API (Google) – Detects toxicity and abusive language.

- Amazon Comprehend – Toxicity and PII detection, custom text classification, and sentiment analysis.

- Azure AI Content Safety (Microsoft) – Detects sexual, violent, hate, and self-harm content in text and images.

- Hugging Face Transformers – Open-source library, with pretrained models on the Hugging Face Hub, for building custom moderation pipelines.

Developers often combine these tools with custom rulesets and dashboards for fine-tuned control.
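As one example of pairing a hosted API with a custom rule, the sketch below builds a Perspective API request with Python's standard library (without sending it), reads the toxicity score from a parsed response, and combines it with a local blocklist. The endpoint and JSON field names follow Perspective's documented `comments:analyze` format as we understand it, so verify them against the current API reference; `decide`, the 0.8 threshold, and the blocklist are hypothetical.

```python
import json
import urllib.request

ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Perspective API toxicity request."""
    body = {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }
    # To send it: json.load(urllib.request.urlopen(build_request(text, key)))
    return urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def toxicity_from_response(response: dict) -> float:
    """Extract the summary TOXICITY score (0 to 1) from a parsed JSON response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def decide(text: str, api_score: float, custom_blocklist: set[str]) -> str:
    """Hypothetical policy: a custom rule overrides the API; otherwise threshold it."""
    if any(word in custom_blocklist for word in text.lower().split()):
        return "block"
    return "flag" if api_score >= 0.8 else "allow"
```

Keeping the rule check local means hard policy lines still apply even if the external API is slow or unavailable.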

8. Beyond Text: Moderating Multimodal AI

As AI systems move beyond text to voice, image, and video, moderation must evolve too.

- Speech Moderation – Uses automatic speech recognition (ASR) to transcribe and analyze voice input.

- Image Moderation – Detects explicit or violent visuals using image classifiers (traditionally CNN-based, increasingly vision transformers).

- Multimodal Context Understanding – Combines text, image, and tone cues to assess risk holistically.

The future of moderation is cross-modal, requiring unified governance across formats.
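The simplest way to combine modalities is late fusion: score each modality separately, then merge the scores. The sketch below uses a weighted noisy-OR, one deliberately simple fusion rule chosen for illustration; the modality names and weights are hypothetical.

```python
def fuse_risk(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Late fusion via weighted noisy-OR: overall risk is high if any modality is risky.

    Each modality's score p is scaled by its weight w (default 1.0, assumed in [0, 1]),
    and the fused risk is 1 - prod(1 - w * p). Absent modalities contribute nothing.
    """
    safe_prob = 1.0
    for modality, p in scores.items():
        safe_prob *= 1.0 - weights.get(modality, 1.0) * p
    return 1.0 - safe_prob
```

For example, `fuse_risk({"text": 0.5, "image": 0.5}, {})` returns `0.75`. Late fusion cannot catch content that is harmful only in combination, such as a benign image paired with benign text that together convey hate; that requires models trained jointly on both modalities.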

9. Designing Transparent Moderation Experiences

Users are more likely to trust chat systems that explain why a message was flagged or blocked.

🧩 Transparency Best Practices

- Show contextual warnings ("Your message was filtered because it may contain hate speech.")

- Offer appeals or feedback options.

- Provide clear content policies accessible from within the chat interface.

- Avoid shadow banning—let users know when moderation occurs.

Transparency transforms moderation from a black box into a collaborative safety process.
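A sketch of how a chat backend might turn a moderation decision into the kind of user-facing notice described above, including a policy link and an appeal option. The `Decision` type, the category codes, the reason strings, and the URLs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from internal category codes to plain-language reasons.
REASONS = {
    "hate": "it may contain hate speech",
    "self_harm": "it may involve self-harm",
    "pii": "it appears to contain personal information",
}


@dataclass
class Decision:
    action: str                     # "allow", "warn", or "block"
    category: Optional[str] = None  # internal category code, if any


def user_notice(decision: Decision, policy_url: str, appeal_url: str) -> Optional[str]:
    """Explain a moderation action to the user instead of failing silently."""
    if decision.action == "allow":
        return None
    reason = REASONS.get(decision.category, "it may violate our content policy")
    verb = "filtered" if decision.action == "block" else "flagged"
    return (f"Your message was {verb} because {reason}. "
            f"See our policy: {policy_url}. Think this is a mistake? Appeal: {appeal_url}")
```

Falling back to a generic reason for unknown categories keeps the user informed even when no specific explanation is available.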

10. The Future of Responsible AI Moderation

As generative AI becomes ubiquitous, moderation will no longer be an afterthought—it will be a core design pillar.

Emerging trends include:

- Federated moderation, where models learn from local contexts without centralizing sensitive data.

- Adaptive moderation, adjusting thresholds based on user maturity or environment.

- Explainable AI (XAI), to show users and auditors why a model made a particular moderation decision.

- Ethical auditing frameworks to ensure continuous compliance and accountability.
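Adaptive moderation can be as simple as selecting a threshold profile per deployment context. A minimal sketch; the context names, categories, and numbers are hypothetical.

```python
# Hypothetical per-context block thresholds: lower values mean stricter moderation.
THRESHOLDS = {
    "default": {"toxicity": 0.8, "sexual": 0.7, "violence": 0.8},
    "under_18": {"toxicity": 0.5, "sexual": 0.2, "violence": 0.5},
    "mature_fiction": {"toxicity": 0.9, "sexual": 0.9, "violence": 0.95},
}


def should_block(scores: dict[str, float], context: str) -> bool:
    """Block if any category score meets the threshold for this context."""
    limits = THRESHOLDS.get(context, THRESHOLDS["default"])
    return any(scores.get(cat, 0.0) >= limit for cat, limit in limits.items())
```

With scores `{"toxicity": 0.6, "sexual": 0.1, "violence": 0.3}`, the message passes under `default` but is blocked under `under_18`. Unknown contexts fall back to the default profile here; a more cautious design would fall back to the strictest profile instead.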

Tomorrow's chatbots will not only be smarter—they'll also be safer, more empathetic, and more transparent.

🧭 Conclusion: Building Trust Through Safety

AI chat systems have the power to connect, educate, and empower millions. But trust is their foundation—and trust depends on safety.

Effective content moderation isn't censorship; it's careful curation of digital spaces where users can speak freely without fear of harm. By blending machine intelligence with human judgment and ethical design, developers can create conversational AI that respects both freedom and dignity.

The future of AI conversation is not about silencing voices—it's about ensuring every voice can be heard safely.

Key Takeaways

- Content moderation in AI chatbots safeguards users against harm, bias, and misinformation.

- Real-time pipelines combine ML classifiers, rule-based filters, and human oversight.

- Ethical moderation frameworks prioritize transparency, fairness, and accountability.

- The next generation of moderation will be adaptive, multimodal, and explainable.

- Ultimately, responsible AI is about earning trust through safety.